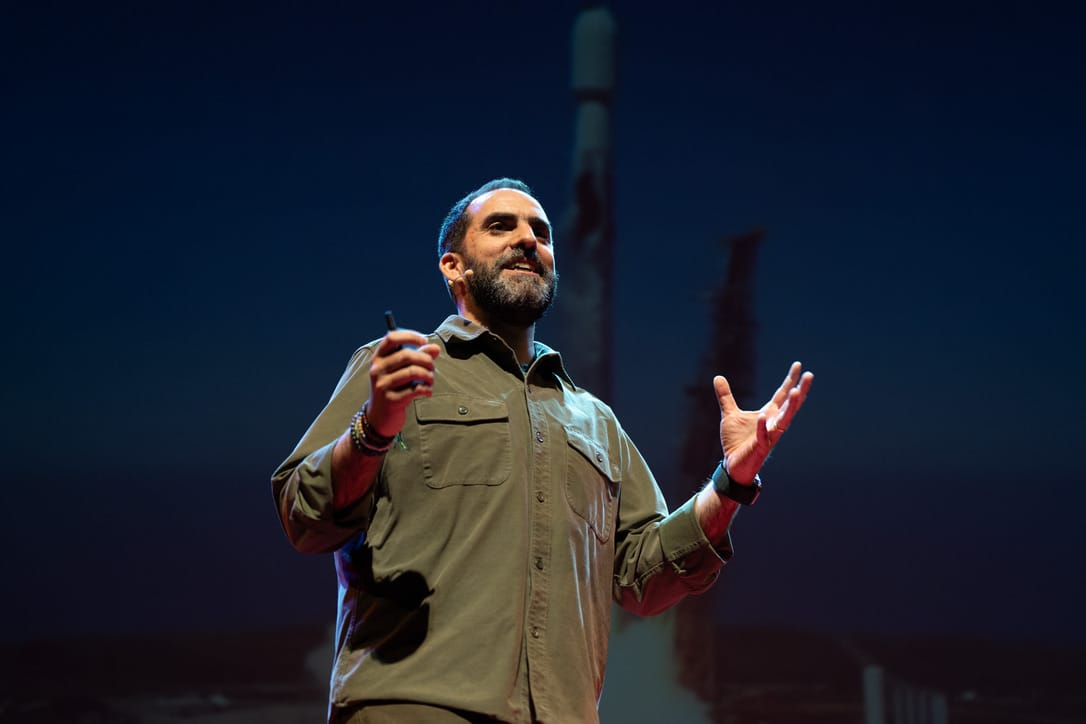

Who Are We Training?

We're training machines to think faster, when we should be training humans to think deeper.

Training means repetition with purpose — how potential becomes capability.

For centuries, we trained humans. Now, we train machines. And while one learns to understand, the other only learns to predict.

The imbalance

Artificial intelligence training now absorbs a vast share of global investment: hundreds of billions of dollars a year, projected to approach 1% of GDP in advanced economies within this decade.

Meanwhile, public spending on early childhood education, the stage that defines how human reasoning develops, remains below 0.8% of GDP on average across the OECD, and closer to 0.3% in Chile.

We are dedicating more to teaching machines how to think than to teaching humans how to reason, and building more data centers than preschools.

The reasoning gap

Even after all that spending, the most advanced AIs still struggle to reason like a child.

They process immense data, but don’t truly understand cause and effect, nuance, or intent.

And yet, the real seven-year-olds of the world still attend underfunded classrooms — often without access to quality early learning or emotional development.

The coincidence is striking: as AI investments accelerated after 2012, early literacy and numeracy gains stagnated across much of the OECD.

Correlation isn’t causation — but it is a warning.

We’re perfecting data training while neglecting human understanding.

What training means

For machines, training means adjusting probabilities to reduce error.

For humans, training means experience, empathy, and curiosity.

One builds prediction; the other builds wisdom.

But machines also learn without supervision, finding patterns in data that no one has labeled or checked for them.

They extract meaning from whatever data they encounter, even when that data is incomplete, biased, or false. Left unsupervised, they amplify our distortions.

The same happens with humans.

When we stop investing in education, minds don’t stop learning — they just learn from whatever surrounds them: misinformation, inequality, distraction.

Neglect produces bias in both code and culture.

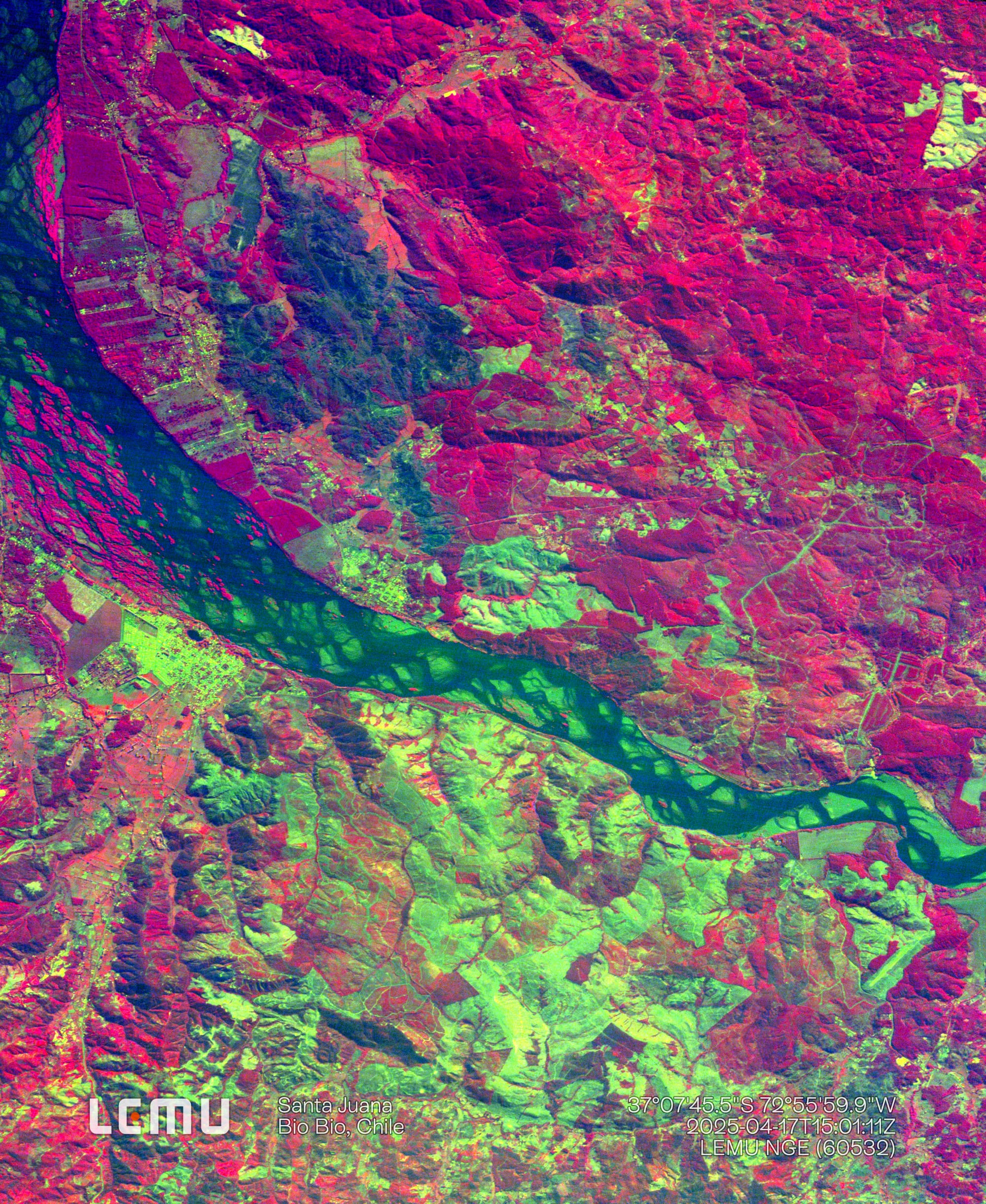

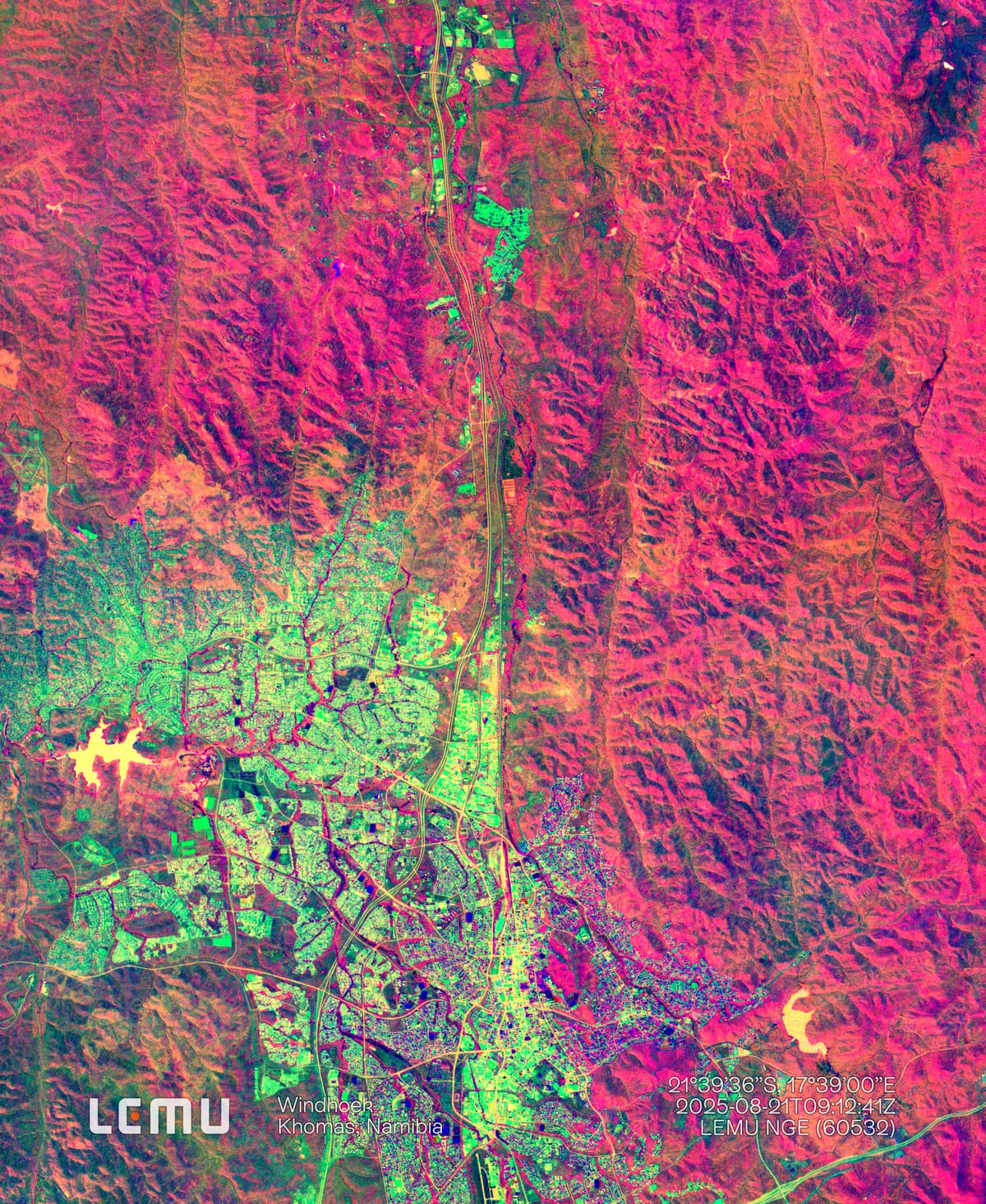

The foundations we’re forgetting

Early education is where reasoning, language, and empathy take root — but also where we learn how the world works.

We should be teaching every child the natural cycle: where things come from, what we take from nature, and what we give back.

That understanding teaches self-sustenance and self-esteem — that anyone can grow food, repair tools, or regenerate what they consume.

From there, we learn how societies organize within that ecosystem: the roles we each play, and how collaboration allows life — and civilization — to endure.

Education must train humans to see themselves as part of nature, not apart from it.

The illusion of progress

Automation may make life easier, but without comprehension it also makes life emptier.

If we keep training machines while untraining humans, we’ll accelerate into ignorance at digital speed.

No algorithm can teach meaning.

That still requires teachers — and time.

The real priority

If even a fraction of today’s AI budgets were redirected toward early human education, the impact would be transformative.

Better classrooms, better teachers, and citizens capable of understanding both technology and the planet it depends on.

Because the future won’t belong to the most automated society.

It will belong to the best-educated one.